How to scrape the web with JavaScript

By Peter Beshai

December 02, 2019

Whether you're a student, researcher, journalist, or just plain interested in some data you've found on the internet, it can be really handy to know how to automatically save this data for later analysis, a process commonly known as "scraping".

There are several different ways to scrape, each with its own advantages and disadvantages, and I'm going to cover three of them in this article:

- Scraping a JSON API

- Scraping server-side rendered HTML

- Scraping JavaScript rendered HTML

For each of these three cases, I'll use real websites as examples (stats.nba.com, espn.com, and basketball-reference.com respectively) to help ground the process. I'll go through the way I investigate what is rendered on the page to figure out what to scrape, how to search through network requests to find relevant API calls, and how to automate the scraping process through scripts written in Node.js. We'll even try out curl and jq on the command line for a bit.

So without further ado, let's begin with a quick primer on CSV vs JSON.

Note: these instructions were written with Chrome 78 and will likely vary slightly in other browsers.


I know CSV, but what is JSON?

You may be familiar with CSV files as a common way of working with data, but for the web, the standard is to use JSON. There are plenty of tools online that can convert between the two formats, so if you need a CSV, getting from one to the other shouldn't be a problem. Here's a brief comparison of how some data may look in CSV vs JSON:

ID,Name,Season,Points
1,LeBron James,2019-20,25.2
1,LeBron James,2018-19,27.4
1,LeBron James,2017-18,27.5

[
  { "id": 1, "name": "LeBron James", "season": "2019-20", "points": 25.2 },
  { "id": 1, "name": "LeBron James", "season": "2018-19", "points": 27.4 },
  { "id": 1, "name": "LeBron James", "season": "2017-18", "points": 27.5 }
]

So there's a bunch more symbols, and the headers are integrated into each item in the JSON version. While this is the more common JSON format, sometimes we'll see data in other arrangements too, which are more similar to CSV. For example, stats.nba.com uses a format similar to:

{
  "headers": ["id", "name", "season", "points"],
  "rows": [
    [1, "LeBron James", "2019-20", 25.2],
    [1, "LeBron James", "2018-19", 27.4],
    [1, "LeBron James", "2017-18", 27.5]
  ]
}

Learning to read and understand this format will go a long way to helping you work with data on the web.
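Because this headers-plus-rows layout is so close to CSV, turning it into CSV text takes only a few lines. The sketch below assumes the `{ headers, rows }` shape shown above (the real stats.nba.com responses call the rows `rowSet`), and `tableToCsv` and `csvField` are hypothetical helper names. Fields containing a comma, double quote, or newline are wrapped in quotes with embedded quotes doubled, which is the usual CSV convention.

```javascript
// Hypothetical helper: quote a CSV field if it contains a comma, double
// quote, or newline, doubling any embedded quotes.
function csvField(value) {
  const text = String(value);
  return /[",\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Hypothetical helper: convert a { headers, rows } table into CSV text,
// with the headers as the first line and one line per row after that.
function tableToCsv({ headers, rows }) {
  return [headers, ...rows]
    .map((row) => row.map(csvField).join(","))
    .join("\n");
}

const table = {
  headers: ["id", "name", "season", "points"],
  rows: [
    [1, "LeBron James", "2019-20", 25.2],
    [1, "LeBron James", "2018-19", 27.4],
    [1, "LeBron James", "2017-18", 27.5],
  ],
};

console.log(tableToCsv(table));
```

Running it prints the same rows as the CSV example at the top of this section, except that the header names are lowercase.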

Case 1 – Using APIs Directly

A very common flow that web applications use to load their data is to have JavaScript make asynchronous requests (AJAX) to an API server (typically REST or GraphQL) and receive their data back in JSON format, which then gets rendered to the screen. In this case, we'll go over a method of intercepting these API requests and working with their JSON payloads directly via a script written in Node.js. We'll use stats.nba.com as our case study to learn these techniques.

Find the relevant API requests

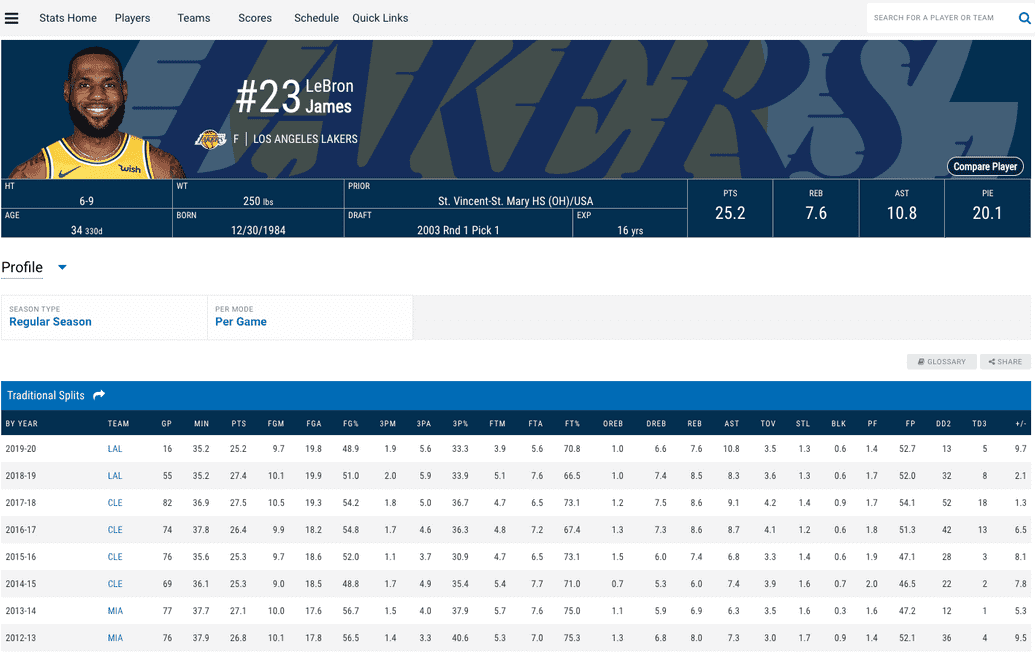

Okay, with some preliminary understanding of data formats under our belt, it's time to take a stab at scraping some real data. Our goal will be to write a script that will save LeBron James' year-over-year career stats.

Step 1: Check if the data is loaded dynamically

Let's head on over to stats.nba.com and find the page with the stats we care about, in this case LeBron's player page: https://stats.nba.com/player/2544/

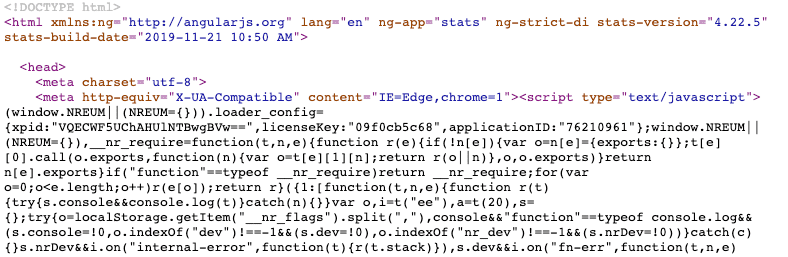

Hey! There's our table right up top on LeBron's page, how convenient. We need to check if this data is already in the HTML or if it is loaded dynamically via a JSON API. To do so, we'll View Source on the page and try to find the data in the HTML.

With the source loaded, we can look for the data by searching (via Cmd+F) for some values that show up in it (e.g. 2017-18 or 51.3). Alas, there are no results! This confirms that the data is loaded dynamically, likely via a JSON API.

Step 2: Find the API endpoint that returns the data

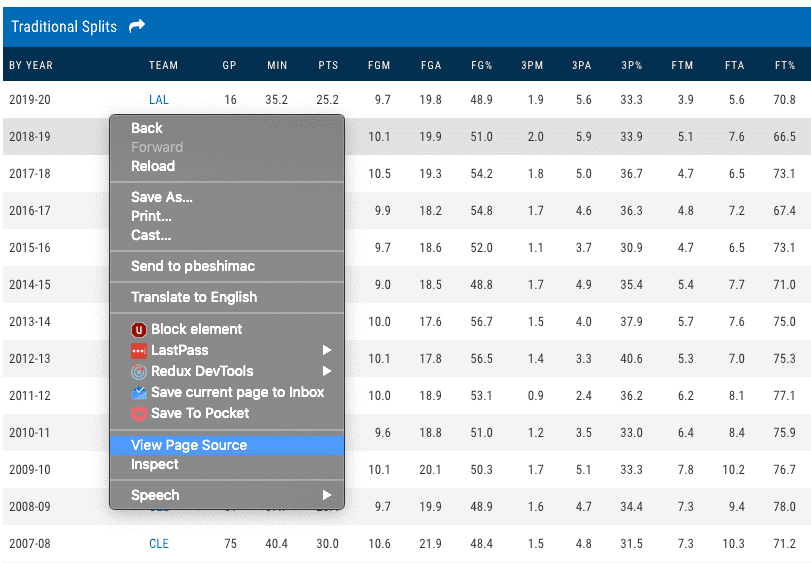

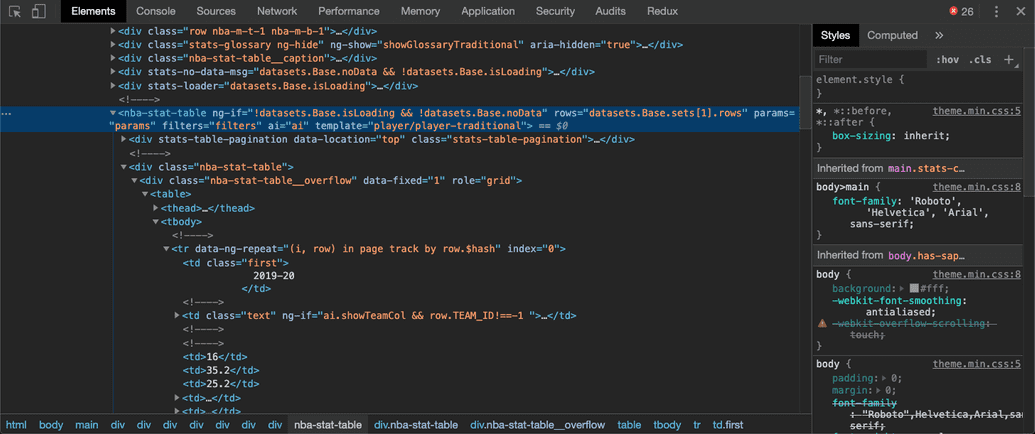

OK, so the data isn't in the HTML, where the heck is it? Let's inspect the element with our browser's developer tools to see if we can find any clues. Right click the table and select Inspect.

We're in luck! Looks like that tag nba-stat-table has some clues in it. This doesn't always happen, but it sure helps when it does.

<nba-stat-table
  ng-if="!datasets.Base.isLoading && !datasets.Base.noData"
  rows="datasets.Base.sets[1].rows"
  params="params"
  filters="filters"
  ai="ai"
  template="player/player-traditional">

In particular, it references datasets.Base and datasets.Base.sets[1].rows. We now have to hunt through the network calls made after the page has loaded to see if we can find the matching API request. To do so, load up the Network tab in the browser's developer tools and refresh the page to capture all requests.

Note the tools should already be open from when you clicked Inspect to load the HTML inspector. Network is just another tab in that panel. Otherwise you can go to View → Developer → Developer Tools to open them.

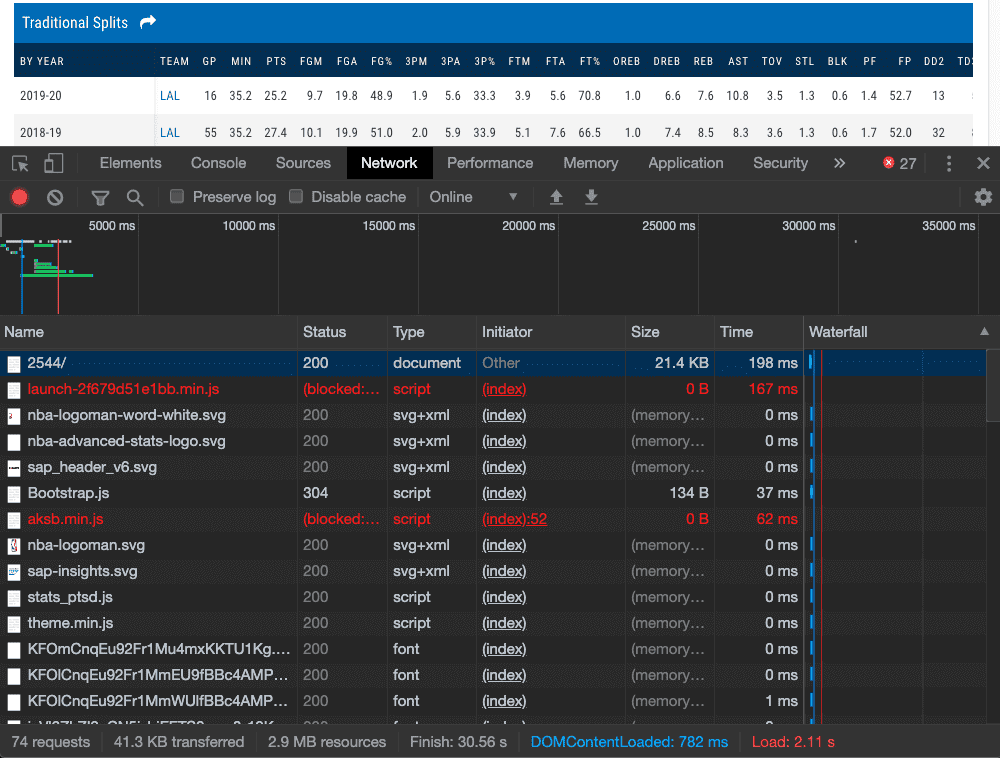

After refreshing the page, your network tab should be full of stuff as shown below.

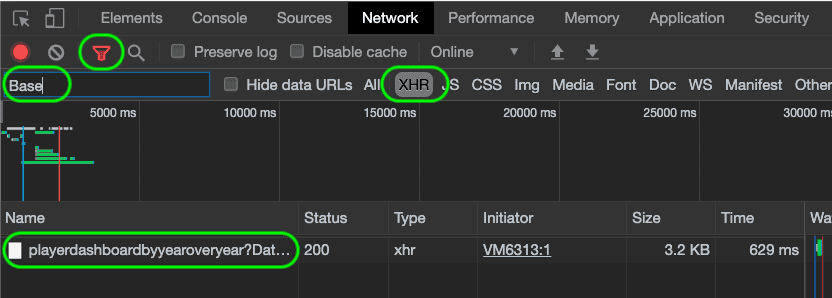

These are all the requests the browser makes to render the page after loading the initial HTML. Let's try and filter it down to something more manageable to sift through. Click the filter icon (third from the left for me) to reveal a filter panel. Select the XHR button to filter out everything (e.g., images, videos) except API requests. Then let's cross our fingers and try filtering for the word "Base" since that seems to be the dataset we care about based on the HTML we found above.

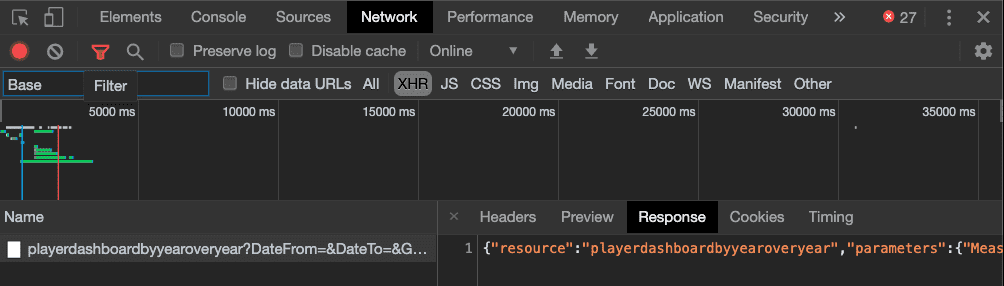

Ooo, looks like we've got something. Click the playerdashboardbyyearoveryear row, then the Response tab to see what's in it.

Yikes! That's not very easy to read, but hey it sure looks like JSON. At this point, I take the text of the response and copy and paste it into a formatter so I can get a better understanding of what's in it. I use my text editor for this, but googling for an online JSON formatter will work just as well.

{
  "resource": "playerdashboardbyyearoveryear",
  "parameters": { "MeasureType": "Base", ... },
  "resultSets": [
    { "name": "OverallPlayerDashboard", ... },
    {
      "name": "ByYearPlayerDashboard",
      "headers": [
        "GROUP_SET", "GROUP_VALUE", "TEAM_ID",
        "TEAM_ABBREVIATION", "MAX_GAME_DATE", "GP", "W", "L",
        "W_PCT", "MIN", "FGM", "FGA", "FG_PCT", ...
      ],
      "rowSet": [
        [
          "By Year", "2019-20", 1610612747,
          "LAL", "2019-11-23T00:00:00", 16, 14, 2,
          0.875000, 35.2, 9.700000, 19.800000, 0.489, ...
        ],
        ...
      ]
    }
  ]
}

Recalling the HTML we inspected from earlier, we were looking for a dataset named "Base" and the second set (sets[1] from before) in it to find our table data. With some careful inspection, we can see that the second item in the resultSets entry in this response matches the data for our table. We have now confirmed this is the API request we're interested in scraping.
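Grabbing the table by position (`resultSets[1]`) works, but it quietly breaks if the API ever reorders its result sets. A slightly sturdier option is to look the set up by its `name` and then pair each entry in `rowSet` with the `headers`. The sketch below does that; `findResultSet` and `rowSetToObjects` are hypothetical helper names, and the sample response is a trimmed-down stand-in for the real payload with most columns omitted.

```javascript
// Hypothetical helper: look up a result set by name instead of by its
// position in the resultSets array.
function findResultSet(response, name) {
  const set = response.resultSets.find((s) => s.name === name);
  if (!set) {
    throw new Error(`No result set named "${name}"`);
  }
  return set;
}

// Hypothetical helper: turn each row into an object keyed by column header.
function rowSetToObjects({ headers, rowSet }) {
  return rowSet.map((row) =>
    Object.fromEntries(headers.map((header, i) => [header, row[i]]))
  );
}

// Trimmed-down stand-in for the real response (most columns omitted).
const response = {
  resource: "playerdashboardbyyearoveryear",
  resultSets: [
    { name: "OverallPlayerDashboard", headers: [], rowSet: [] },
    {
      name: "ByYearPlayerDashboard",
      headers: ["GROUP_VALUE", "TEAM_ABBREVIATION", "GP", "FG_PCT"],
      rowSet: [["2019-20", "LAL", 16, 0.489]],
    },
  ],
};

const byYear = rowSetToObjects(findResultSet(response, "ByYearPlayerDashboard"));
console.log(byYear);
```

In a real script, you would pass the parsed JSON response in place of the stand-in object.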

Download the response data with cURL

Now that we know how to manually find the data we care about, let's work on automating it with a script. We'll be using the terminal (Applications/Utilities/Terminal on a Mac) now to quickly iterate with the tools curl and jq.

Note you may need to install jq if you do not already have it. The easiest way is with Homebrew. Run brew install jq on the command line to get it.

Combining these tools with a bash script is probably sufficient for a bunch of scraping needs, but in this article we'll migrate over to using node.js after figuring out the exact request we want to make.

Ok, so back to the Network tab in the browser's developer tools. Right click the "playerdashboardbyyearoveryear" row and select Copy → Copy as cURL. This will copy something like this to your clipboard:

curl 'https://stats.nba.com/stats/playerdashboardbyyearoveryear?DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&PlayerID=2544&PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&SeasonType=Regular+Season&ShotClockRange=&Split=yoy&VsConference=&VsDivision=' \
  -H 'Connection: keep-alive' \
  -H 'Accept: application/json, text/plain, */*' \
  -H 'x-nba-stats-token: true' \
  -H 'X-NewRelic-ID: VQECWF5UChAHUlNTBwgBVw==' \
  -H 'User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36' \
  -H 'x-nba-stats-origin: stats' \
  -H 'Sec-Fetch-Site: same-origin' \
  -H 'Sec-Fetch-Mode: cors' \
  -H 'Referer: https://stats.nba.com/player/2544/' \
  -H 'Accept-Encoding: gzip, deflate, br' \
  -H 'Accept-Language: en-US,en;q=0.9,id;q=0.8' \
  -H 'Cookie: a_bunch_of_stuff=that_i_removed;' \
  --compressed

If you paste that into your terminal, you'll see the JSON response show up. Nice!! But boy there's a bunch of stuff that we're adding to this command: HTTP Headers with the -H flag. Do we really need all of that? It turns out the answer is maybe. Some web servers will take precautions to protect against bots, while still allowing normal users to access their sites. To appear as a normal user while we scrape, we sometimes need to include all kinds of HTTP headers and cookies.

I'd like the request to be as simple as possible, so at this point I try to remove as many of the extra options as I can while keeping the request working. This results in the following:

curl 'https://stats.nba.com/stats/playerdashboardbyyearoveryear?DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&PlayerID=2544&PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&SeasonType=Regular+Season&ShotClockRange=&Split=yoy&VsConference=&VsDivision=' \
  -H 'Connection: keep-alive' \
  -H 'User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36' \
  -H 'x-nba-stats-origin: stats' \
  -H 'Referer: https://stats.nba.com/player/2544/' \
  --compressed

Now we can get some nice colors and formatting by simply piping the result to jq: curl ... | jq. But jq is awesome and powerful and lets us do a lot more. For example, we can ask jq for the first row of the second result set by piping the output through a filter, curl ... | jq '.resultSets[1].rowSet[0]' (quoting the filter keeps shells like zsh from treating the square brackets as a filename pattern):

curl 'https://stats.nba.com/stats/playerdashboardbyyearoveryear?DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&PlayerID=2544&PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&SeasonType=Regular+Season&ShotClockRange=&Split=yoy&VsConference=&VsDivision=' \
  -H 'Connection: keep-alive' \
  -H 'User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36' \
  -H 'x-nba-stats-origin: stats' \
  -H 'Referer: https://stats.nba.com/player/2544/' \
  --compressed | jq '.resultSets[1].rowSet[0]'

[ "By Year", "2019-20", 1610612747, "LAL", "2019-11-23T00:00:00", 16,14, 2, 0.875, 35.2, 9.7, 19.8, 0.489, 1.9, 5.6, 0.333, 3.9, 5.6,0.708, 1, 6.6, 7.6, 10.8, 3.5, 1.3, 0.6, 0.8, 1.4, 4.5, 25.2, 9.7,52.7, 13, 5, 17, 17, 17, 1, 17, 14, 8, 11, 2, 2, 11, 17, 17, 14, 14,5, 7, 1, 7, 15, 16, 9, 17, 15, 16, 2, 6, 15, 6, 264, "2019-20" ]

Look at us go! Everything is all right, everything is automatic. But we miss JavaScript, so let's head on over to Node land.

Write a Node.js script to scrape multiple pages

At this point we've figured out the URL and necessary headers to request the data we want. Now we have everything we need to write a script to scrape the API automatically. You could use whatever language you want here, but I'll do it using node.js with the request library.

In an empty directory, run the following commands in your terminal to initialize a javascript project:

npm init -y
npm install --save request request-promise-native

Now we just need to translate our request from the cURL format above to the format needed by the request library. We'll use the promise version so we can take advantage of the very convenient async/await syntax, which lets us work with asynchronous code in a very readable way. Put the following code in a file called index.js.

const rp = require("request-promise-native");
const fs = require("fs");

async function main() {
  console.log("Making API Request...");
  // request the data from the JSON API
  const results = await rp({
    uri: "https://stats.nba.com/stats/playerdashboardbyyearoveryear?DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&PlayerID=2544&PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&SeasonType=Regular+Season&ShotClockRange=&Split=yoy&VsConference=&VsDivision=",
    headers: {
      "Connection": "keep-alive",
      "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",
      "x-nba-stats-origin": "stats",
      "Referer": "https://stats.nba.com/player/2544/"
    },
    json: true
  });
  console.log("Got results =", results);

  // save the JSON to disk
  await fs.promises.writeFile("output.json", JSON.stringify(results, null, 2));
  console.log("Done!");
}

// start the main script
main();

Now run this on the command line with

node index.js

Boom, we replaced a 1-liner from the command line with 30 lines of JavaScript. We're so modern!

Getting this data for other players

Cool, so we've got LeBron's data being downloaded from the API automatically, but how about we get it going for a few other players too, so we can feel real productive. You'll notice that the API URL includes a PlayerID query parameter:

https://stats.nba.com/stats/playerdashboardbyyearoveryear?

DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&

Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&

PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&

PlayerID=2544&

PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&

SeasonType=Regular+Season&ShotClockRange=&Split=yoy&

VsConference=&VsDivision=
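Splicing the ID into one long string works, but the query string is easier to read and tweak when it's built from an object. Here's a sketch using Node's built-in `URL` class and its `searchParams`; `buildYearOverYearUrl` is a hypothetical helper, and the parameter values are copied from the URL above. `URLSearchParams` encodes the space in `Regular Season` as `+`, so for player 2544 this should reproduce the original URL exactly.

```javascript
// Hypothetical helper: build the year-over-year stats URL for one player.
// Parameter names and values are copied from the URL in the article.
function buildYearOverYearUrl(playerId, season = "2019-20") {
  const url = new URL("https://stats.nba.com/stats/playerdashboardbyyearoveryear");
  const params = {
    DateFrom: "", DateTo: "", GameSegment: "", LastNGames: 0, LeagueID: "00",
    Location: "", MeasureType: "Base", Month: 0, OpponentTeamID: 0, Outcome: "",
    PORound: 0, PaceAdjust: "N", PerMode: "PerGame", Period: 0,
    PlayerID: playerId,
    PlusMinus: "N", Rank: "N", Season: season, SeasonSegment: "",
    SeasonType: "Regular Season", ShotClockRange: "", Split: "yoy",
    VsConference: "", VsDivision: "",
  };
  // Parameters are appended in insertion order; spaces are encoded as "+"
  for (const [key, value] of Object.entries(params)) {
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

console.log(buildYearOverYearUrl(201935));
```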

We just need to figure out the IDs of the other players we're interested in, modify that parameter, and we'll be good to go. I opened a few player pages on stats.nba.com and took the number from the URL to map these IDs to players:

| Player ID | Player | URL |
|---|---|---|
| 2544 | LeBron James | https://stats.nba.com/player/2544/ |
| 1629029 | Luka Doncic | https://stats.nba.com/player/1629029/ |
| 201935 | James Harden | https://stats.nba.com/player/201935/ |
| 202695 | Kawhi Leonard | https://stats.nba.com/player/202695/ |

We can modify our script above to use the player ID as a parameter:

const rp = require("request-promise-native");
const fs = require("fs");

// helper to delay execution by 300ms to 1100ms
async function delay() {
  const durationMs = Math.random() * 800 + 300;
  return new Promise(resolve => {
    setTimeout(() => resolve(), durationMs);
  });
}

async function fetchPlayerYearOverYear(playerId) {
  console.log(`Making API Request for ${playerId}...`);
  // add the playerId to the URI and the Referer header
  // NOTE: we could also have used the `qs` option for the
  // query parameters.
  const results = await rp({
    uri:
      "https://stats.nba.com/stats/playerdashboardbyyearoveryear?DateFrom=&DateTo=&GameSegment=&LastNGames=0&LeagueID=00&Location=&MeasureType=Base&Month=0&OpponentTeamID=0&Outcome=&PORound=0&PaceAdjust=N&PerMode=PerGame&Period=0&" +
      `PlayerID=${playerId}` +
      "&PlusMinus=N&Rank=N&Season=2019-20&SeasonSegment=&SeasonType=Regular+Season&ShotClockRange=&Split=yoy&VsConference=&VsDivision=",
    headers: {
      "Connection": "keep-alive",
      "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",
      "x-nba-stats-origin": "stats",
      "Referer": `https://stats.nba.com/player/${playerId}/`
    },
    json: true
  });

  // save to disk with playerID as the file name
  await fs.promises.writeFile(
    `${playerId}.json`,
    JSON.stringify(results, null, 2)
  );
}

async function main() {
  // PlayerIDs for LeBron, Harden, Kawhi, Luka
  const playerIds = [2544, 201935, 202695, 1629029];
  console.log("Starting script for players", playerIds);

  // make an API request for each player
  for (const playerId of playerIds) {
    await fetchPlayerYearOverYear(playerId);

    // be polite to our friendly data hosts and
    // don't crash their servers
    await delay();
  }

  console.log("Done!");
}

main();

I mostly just extracted the request into its own async function called fetchPlayerYearOverYear and then looped over an array of IDs to fetch them all. As a courtesy to our beloved data hosts, I like to put in a delay after each fetch to make sure I am not bombarding their servers with too many requests at once. I hope this prevents me from being blacklisted for spamming requests to take their data for my own entirely benevolent purposes.
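The fetch-then-pause loop is easy to generalize to any list of IDs. The sketch below is one way to do it: `fetchOne` stands in for a function like `fetchPlayerYearOverYear`, and the hypothetical `minMs`/`maxMs` options default to the same 300 to 1100 ms range as `delay()`. It also records failures per ID and keeps going, so one bad request doesn't sink the whole run; failing fast would be an equally reasonable choice.

```javascript
// Wait a random number of milliseconds between minMs and maxMs.
function randomDelay(minMs, maxMs) {
  const durationMs = minMs + Math.random() * (maxMs - minMs);
  return new Promise((resolve) => setTimeout(resolve, durationMs));
}

// Hypothetical helper: call fetchOne(id) for each ID in order, one request
// at a time, with a random pause between requests. Failures are recorded
// per ID instead of stopping the remaining requests.
async function fetchSequentially(ids, fetchOne, { minMs = 300, maxMs = 1100 } = {}) {
  const results = [];
  const errors = [];
  for (let i = 0; i < ids.length; i++) {
    const id = ids[i];
    try {
      results.push({ id, data: await fetchOne(id) });
    } catch (error) {
      errors.push({ id, error });
    }
    // be polite: pause before the next request (not after the last one)
    if (i < ids.length - 1) {
      await randomDelay(minMs, maxMs);
    }
  }
  return { results, errors };
}
```

For example, `await fetchSequentially([2544, 201935, 202695, 1629029], fetchPlayerYearOverYear)` would make the same four requests as `main()`, one at a time.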

And with that, we've successfully scraped a JSON API using Node.js. One case down, two to go. Let's move on to covering scraping HTML that's rendered by the web server in Case 2.

Case 2 – Server-side Rendered HTML

Besides getting data asynchronously via an API, another common technique used by web servers is to render the data directly into the HTML before serving the page up. We'll cover how to extract data in this case by downloading and parsing the HTML with the help of Cheerio.

Find the HTML with the data

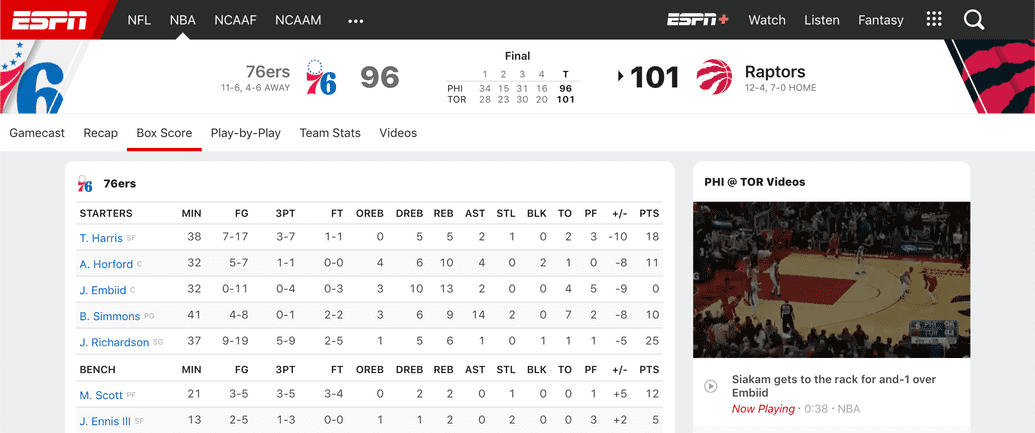

In this case, we'll go through an example of extracting some juicy data from the HTML for NBA box scores on espn.com.

Step 1: Verify the data comes loaded with the HTML

Similar to Case 1, we're going to want to verify that the data is actually being loaded with the page. We are going to scrape the box scores for a basketball game between the 76ers and Raptors available at: https://www.espn.com/nba/boxscore?gameId=401160888.

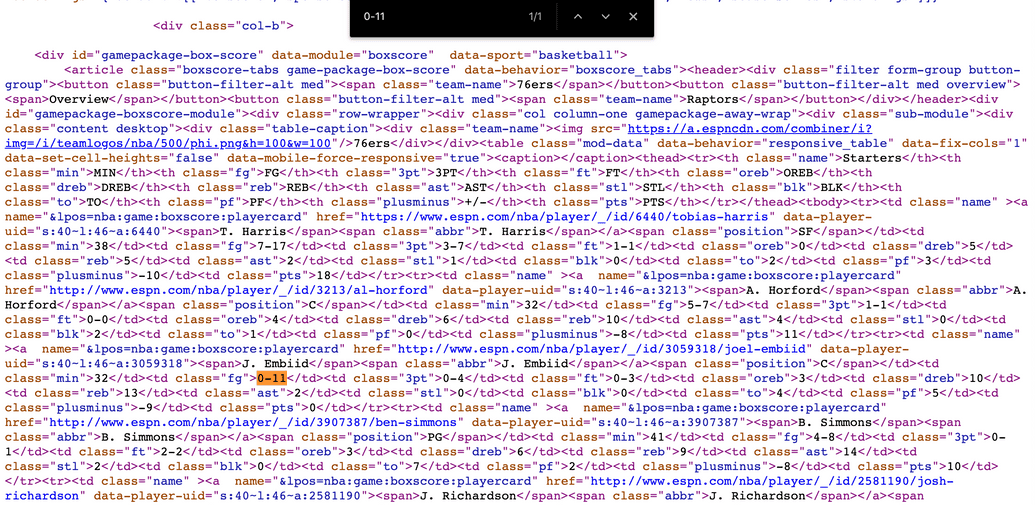

To do so, we'll view the source of the page we're investigating, as we did in Case 1, and search for some of the data (Cmd+F). In this case, I searched for the remarkable 0-11 stat from Joel Embiid's row.

There it is! Right in the HTML, practically begging to be scraped.

Step 2: Figure out a selector to access the data

Now that we've confirmed the data is in the actual HTML on page load, we should take a moment to figure out how to access it in the browser. We need some way to identify programmatically the section of the HTML we care about, something CSS Selectors are great at. This can get a bit messy and can often end up being pretty fragile, but such is life in the world of scraping.

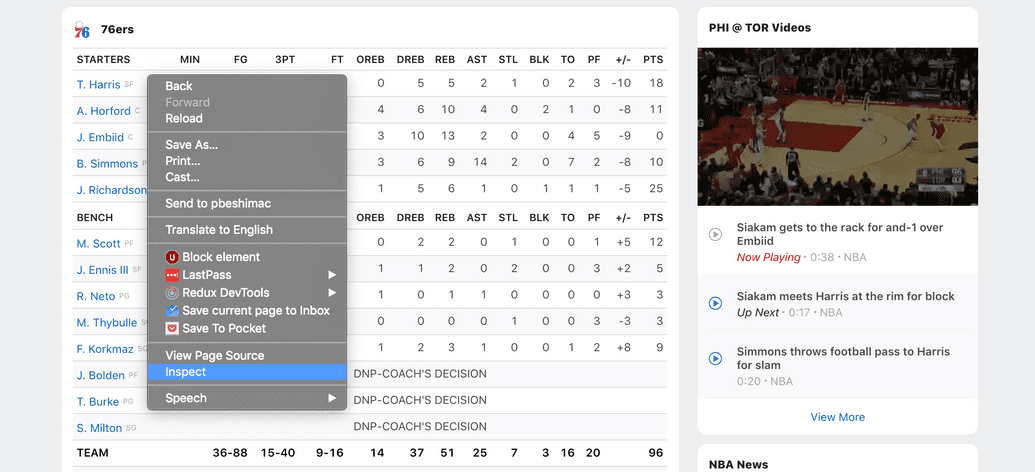

Let's go back to the web page, right click the table and select Inspect.

You should see something similar to the screenshot above.

What we want is a selector that will give us all rows (or <tr>s) in the table. We'll use the handy document.querySelectorAll() function to iterate until we get what we want. Let's start with something general and get more and more specific until we only have what we need. In the browser console (View → Developer → Javascript Console), we can interactively try our selectors until we get the right one. Enter the following:

document.querySelectorAll('tr')

This outputs:

> NodeList(41) [tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr.highlight, tr.highlight, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr.highlight, tr.highlight, tr, tr, tr.highlight, tr.highlight, tr, tr]

Well, using "tr" is too general. It's returning 41 rows, but the 76ers only have 13 players in the table. Whoops. Looking back at the inspected HTML, we can see some IDs and classes of parent elements to the <tr>s that we can use to winnow down our selection.

<div id="gamepackage-boxscore-module">
  <div class="row-wrapper">
    <div class="col column-one gamepackage-away-wrap">
      <div class="sub-module">
        <div class="content desktop">
          <div class="table-caption">...</div>
          <table class="mod-data" data-behavior="responsive_table" data-fix-cols="1" data-set-cell-heights="false" data-mobile-force-responsive="true">
            <caption></caption>
            <thead>...</thead>
            <tbody>
              <tr>...</tr>
              <tr>...</tr>
              ...

document.querySelectorAll('.gamepackage-away-wrap tbody tr')
> NodeList(15) [tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr.highlight, tr.highlight]

Getting pretty close, looks like we just have a couple of rows with the class highlight added to them at the end. On closer inspection, they are the summary total rows at the bottom of the table. We can update our selector to filter them out or we can do that later when writing the Node.js script. Let's just get rid of them with the selector.

document.querySelectorAll('.gamepackage-away-wrap tbody tr:not(.highlight)')
> NodeList(13) [tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr, tr]

All right! Looks like we've got a selector to get us all the player rows for the away team from the box score. Good enough to move on to scripting.

Write a Node.js script to scrape the page

So we've got our selector to get all the rows we care about from the Box Score table (.gamepackage-away-wrap tbody tr:not(.highlight)) and we've got the URL (https://www.espn.com/nba/boxscore?gameId=401160888) of the page we want to scrape. That's all we need, so let's go ahead and scrape.

Download the HTML page

Things start off exactly the same as we did in Case 1. In an empty directory, run the following commands in your terminal to initialize a javascript project:

npm init -y
npm install --save request request-promise-native

I always prefer to save the HTML file directly to disk, so that while I'm iterating on parsing the data I don't have to keep hitting the web server. So let's write a short script, index.js, to download the HTML as we did in Case 1.

const rp = require('request-promise-native');
const fs = require('fs');

async function downloadBoxScoreHtml() {
  // where to download the HTML from
  const uri = 'https://www.espn.com/nba/boxscore?gameId=401160888';
  // the output filename
  const filename = 'boxscore.html';
  // download the HTML from the web server
  console.log(`Downloading HTML from ${uri}...`);
  const results = await rp({ uri: uri });
  // save the HTML to disk
  await fs.promises.writeFile(filename, results);
}

async function main() {
  console.log('Starting...');
  await downloadBoxScoreHtml();
  console.log('Done!');
}

main();

Now run it with

node index.js

Great! With that, we've got our HTML saved to disk as boxscore.html. The request to download it was a lot simpler than in Case 1, when we had to add a bunch of headers; it looks like ESPN is a little less defensive than NBA.com.

Before we start parsing the HTML, let's update the script so it only downloads the file if we don't already have it. This will make it easier to quickly iterate on the script by just re-running it. To do so, we add a check before making the request:

async function downloadBoxScoreHtml() {
  // where to download the HTML from
  const uri = 'https://www.espn.com/nba/boxscore?gameId=401160888';
  // the output filename
  const filename = 'boxscore.html';

  // check if we already have the file
  const fileExists = fs.existsSync(filename);
  if (fileExists) {
    console.log(`Skipping download for ${uri} since ${filename} already exists.`);
    return;
  }

  // download the HTML from the web server
  console.log(`Downloading HTML from ${uri}...`);
  const results = await rp({ uri: uri });
  // save the HTML to disk
  await fs.promises.writeFile(filename, results);
}
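If you scrape many pages, that check-then-download logic is worth pulling into a reusable helper. In this sketch, `downloadIfMissing` is a hypothetical name and the download step is passed in as a function (in the script above it would be something like `() => rp({ uri })`), which keeps the caching logic independent of the HTTP library. Unlike the original function, it also returns the HTML, reading it from disk when the file is already there.

```javascript
const fs = require("fs");

// Hypothetical helper: if filename already exists, return its contents;
// otherwise call download(), save the result to filename, and return it.
async function downloadIfMissing(filename, download) {
  if (fs.existsSync(filename)) {
    console.log(`Using cached copy in ${filename}`);
    return fs.promises.readFile(filename, "utf8");
  }
  const body = await download();
  await fs.promises.writeFile(filename, body);
  return body;
}
```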

Ok we're all set to start parsing the HTML for our data, but we'll need a new dependency: Cheerio.

Parse the HTML with Cheerio

The best way to pull out data from the HTML is to use an HTML parser like Cheerio. Some people will try to get by with regular expressions, but they are insufficient in general and are a pain in the butt to write anyway. Cheerio is kind of like our old friend jQuery but on the server side, so it's time to get those $s out again! What a time to be alive.

Back in the terminal, we can install cheerio by running:

npm install --save cheerio

To get started with Cheerio, we need to pass it the HTML as a string for it to parse and make queryable. To do so, we call its load function:

const $ = cheerio.load('<html>...</html>')

We can read our HTML from disk and load it into cheerio:

// the input filename
const htmlFilename = 'boxscore.html';
// read the HTML from disk as a string
const html = await fs.promises.readFile(htmlFilename, 'utf8');
// parse the HTML with Cheerio
const $ = cheerio.load(html);

After the HTML has been parsed, we can query it by passing our selector to the $ function:

const $trs = $('.gamepackage-away-wrap tbody tr:not(.highlight)')

This gives us a selection containing the parsed <tr> nodes. We can verify it has the HTML we're interested in by running $.html on it:

console.log($.html($trs));

We get (after some formatting):

<tr>
  <td class="name">
    <a name="&lpos=nba:game:boxscore:playercard" href="https://www.espn.com/nba/player/_/id/6440/tobias-harris" data-player-uid="s:40~l:46~a:6440">
      <span>T. Harris</span>
      <span class="abbr">T. Harris</span>
    </a>
    <span class="position">SF</span>
  </td>
  <td class="min">38</td>
  <td class="fg">7-17</td>
  <td class="3pt">3-7</td>
  <td class="ft">1-1</td>
  <td class="oreb">0</td>
  <td class="dreb">5</td>
  <td class="reb">5</td>
  <td class="ast">2</td>
  <td class="stl">1</td>
  <td class="blk">0</td>
  <td class="to">2</td>
  <td class="pf">3</td>
  <td class="plusminus">-10</td>
  <td class="pts">18</td>
</tr>
...

Looks good. It seems like we can create an object mapping the class attribute of the <td> to the value contained inside of it. Let's iterate over each of the rows and pull out the data. Note we need to use toArray() to convert from a jQuery-style selection to a standard array to make iteration easier to reason about.

const values = $trs.toArray().map(tr => {
  // find all children <td>
  const tds = $(tr).find('td').toArray();
  // create a player object based on the <td> values
  const player = {};
  for (const td of tds) {
    // parse the <td>
    const $td = $(td);
    // map the td class attr to its value
    const key = $td.attr('class');
    const value = $td.text();
    player[key] = value;
  }
  return player;
});

If we look at our values, we get something like:

[
  {
    "name": "T. HarrisT. HarrisSF",
    "min": "38",
    "fg": "7-17",
    "3pt": "3-7",
    "ft": "1-1",
    "oreb": "0",
    "dreb": "5",
    "reb": "5",
    "ast": "2",
    "stl": "1",
    "blk": "0",
    "to": "2",
    "pf": "3",
    "plusminus": "-10",
    "pts": "18"
  },
  ...
]

It's pretty close, but there are a few problems. Looks like the name is wrong and all the numbers are stored as strings. There are a number of ways to solve these problems, but we'll do a couple of quick ones. To fix the name, notice that the HTML for the first column is actually a bit different than the others:

<td class="name">
  <a name="&lpos=nba:game:boxscore:playercard" href="https://www.espn.com/nba/player/_/id/6440/tobias-harris" data-player-uid="s:40~l:46~a:6440">
    <span>T. Harris</span>
    <span class="abbr">T. Harris</span>
  </a>
  <span class="position">SF</span>
</td>

So let's handle the name column differently by selecting the first <span> in the <a> tag only.

for (const td of tds) {
  const $td = $(td);
  // map the td class attr to its value
  const key = $td.attr('class');
  let value;
  if (key === 'name') {
    // only take the first <span> inside the link
    value = $td.find('a span:first-child').text();
  } else {
    value = $td.text();
  }
  player[key] = value;
}

And since we know all the values are strings, we can do a simple check to try and make numbers be represented as numbers:

player[key] = isNaN(+value) ? value : +value;

With those changes, we now get the following results:

[
  {
    "name": "T. Harris",
    "min": 38,
    "fg": "7-17",
    "3pt": "3-7",
    "ft": "1-1",
    "oreb": 0,
    "dreb": 5,
    "reb": 5,
    "ast": 2,
    "stl": 1,
    "blk": 0,
    "to": 2,
    "pf": 3,
    "plusminus": -10,
    "pts": 18
  },
  ...
]
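One caveat with the `isNaN(+value)` check: `+''` and `+' '` both evaluate to `0`, so an empty or whitespace-only cell would silently turn into `0`. A slightly stricter sketch converts only strings that look like plain integers or decimals and returns everything else as a trimmed string. `parseCell` is a hypothetical name, and the regular expression is an assumption about what the box score cells contain; values like `7-17` are deliberately left as strings.

```javascript
// Hypothetical helper: turn a cell's text into a number only when it looks
// like a plain number (optional sign, digits, optional decimal part).
// Anything else, including "" and "7-17", is returned as a trimmed string.
function parseCell(text) {
  const trimmed = text.trim();
  return /^[+-]?\d+(\.\d+)?$/.test(trimmed) ? Number(trimmed) : trimmed;
}

parseCell("38");   // returns 38
parseCell("-10");  // returns -10
parseCell("7-17"); // returns "7-17"
parseCell("");     // returns "" (the isNaN check would give 0)
```

Inside the loop, `player[key] = parseCell(value);` would replace the `isNaN` line.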

Good enough for me. With these values parsed, all that's left is to save them to the disk and we're done.

// save the scraped results to disk
await fs.promises.writeFile('boxscore.json', JSON.stringify(values, null, 2));

And there we have it, we've downloaded HTML with data already rendered into it, loaded and parsed it with Cheerio, then saved the JSON we care about to its own file. Consider ESPN scraped. Here's the full script:

const rp = require('request-promise-native');
const fs = require('fs');
const cheerio = require('cheerio');

async function downloadBoxScoreHtml() {
  // where to download the HTML from
  const uri = 'https://www.espn.com/nba/boxscore?gameId=401160888';
  // the output filename
  const filename = 'boxscore.html';

  // check if we already have the file
  const fileExists = fs.existsSync(filename);
  if (fileExists) {
    console.log(`Skipping download for ${uri} since ${filename} already exists.`);
    return;
  }

  // download the HTML from the web server
  console.log(`Downloading HTML from ${uri}...`);
  const results = await rp({ uri: uri });
  // save the HTML to disk
  await fs.promises.writeFile(filename, results);
}

async function parseBoxScore() {
  console.log('Parsing box score HTML...');
  // the input filename
  const htmlFilename = 'boxscore.html';
  // read the HTML from disk as a string
  const html = await fs.promises.readFile(htmlFilename, 'utf8');
  // parse the HTML with Cheerio
  const $ = cheerio.load(html);

  // Get our rows
  const $trs = $('.gamepackage-away-wrap tbody tr:not(.highlight)');
  const values = $trs.toArray().map(tr => {
    // find all children <td>
    const tds = $(tr).find('td').toArray();
    // create a player object based on the <td> values
    const player = {};
    for (const td of tds) {
      const $td = $(td);
      // map the td class attr to its value
      const key = $td.attr('class');
      let value;
      if (key === 'name') {
        value = $td.find('a span:first-child').text();
      } else {
        value = $td.text();
      }
      // convert numeric strings to numbers
      player[key] = isNaN(+value) ? value : +value;
    }
    return player;
  });

  return values;
}

async function main() {
  console.log('Starting...');
  await downloadBoxScoreHtml();
  const boxScore = await parseBoxScore();

  // save the scraped results to disk
  await fs.promises.writeFile('boxscore.json', JSON.stringify(boxScore, null, 2));
  console.log('Done!');
}

main();

By extracting the URL and the filenames into parameters, we could run this script for many games, but I'll leave that as an exercise for the reader.
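One way to start on that exercise is to derive everything game-specific from the game ID. The sketch below only builds the names; `boxScoreTarget` is a hypothetical helper, and the per-game filenames are an assumption so that games don't overwrite each other's files.

```javascript
// Hypothetical helper: derive the box score URL and local filenames from
// an ESPN game ID so the download and parse steps can be reused per game.
function boxScoreTarget(gameId) {
  const url = new URL("https://www.espn.com/nba/boxscore");
  url.searchParams.set("gameId", String(gameId));
  return {
    uri: url.toString(),
    htmlFilename: `boxscore-${gameId}.html`,
    jsonFilename: `boxscore-${gameId}.json`,
  };
}

// Only 401160888 comes from this article; add your own game IDs.
const targets = [401160888].map(boxScoreTarget);
console.log(targets[0].uri);
// https://www.espn.com/nba/boxscore?gameId=401160888
```

`downloadBoxScoreHtml` and `parseBoxScore` would then accept `uri` and the filenames as arguments, and `main` would loop over the targets, awaiting each one in turn.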

Case 3 – JavaScript Rendered HTML

Dang, that's a lot of scraping already, I'm ready for a nap. But we've got one more case to go: scraping the HTML after JavaScript has run on the page. This case can sometimes be handled as in Case 1 by intercepting API requests directly, but it may be easier to just get the HTML and work with it. The request library we've been using thus far is insufficient for these purposes: it just downloads HTML and doesn't run any JavaScript on the page. We'll need to turn to the ultra-powerful Puppeteer to get the job done.

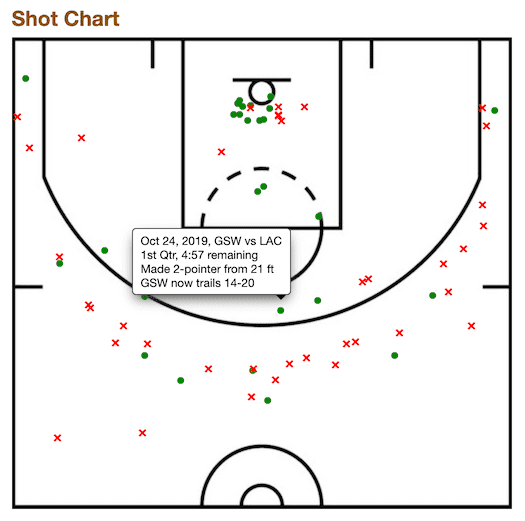

For this final case, we'll scrape some Steph Curry shooting data from Basketball Reference. Tired of basketball yet? Sorry.

Write a Node.js script to scrape the page after running JavaScript

Our goal will be to scrape the data from this lovely shot chart on Steph Curry's 2019-20 shooting page: https://www.basketball-reference.com/players/c/curryst01/shooting/2020.

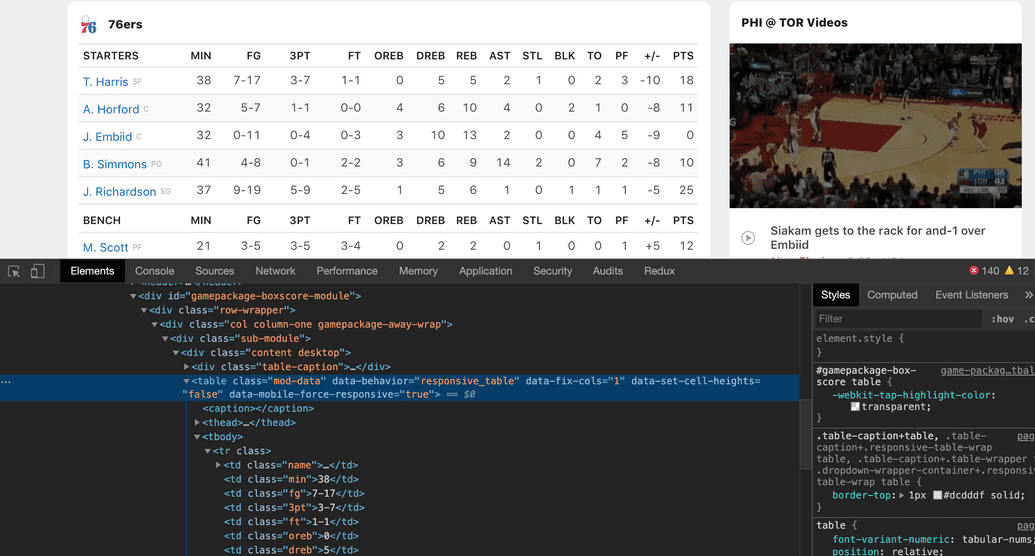

By right clicking and inspecting one of the ● or × symbols (similar to how we did it in cases 1 and 2), we can see they are nested <div>s:

<div class="table_outer_container">
  <div class="overthrow table_container" id="div_shot-chart">
    <div id="shot-wrapper">
      <div class="shot-area">
        <img ...>
        <div
          style="top:331px;left:259px;"
          tip="Oct 24, 2019, GSW vs LAC<br>1st Qtr, 10:02 remaining<br>Missed 3-pointer from 28 ft<br>GSW trails 0-5"
          class="tooltip miss">×</div>
        ...
        <div
          style="top:263px;left:264px;"
          tip="Oct 24, 2019, GSW vs LAC<br>1st Qtr, 4:57 remaining<br>Made 2-pointer from 21 ft<br>GSW now trails 14-20"
          class="tooltip make">●</div>
        ...

Using the browser console (View → Developer → Javascript Console), we can find a selector (in a similar way as we did in case 2) that works to get us all the shot <div>s:

document.querySelectorAll('.shot-area > div')
> NodeList(66) [div.tooltip.miss, div.tooltip.miss, div.tooltip.miss, div.tooltip.make, div.tooltip.make, ...]
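Each shot `<div>` carries its details in the `tip` attribute and its position in the inline `style`. Once you have those two strings (from Cheerio's `.attr()`, for example), plain JavaScript can turn them into structured records. This sketch is based only on the two sample tooltips above: `parseShot` is a hypothetical name, it assumes the attribute reads exactly as shown in the inspector, and its regular expressions return `null` fields for any wording they don't recognize.

```javascript
// Hypothetical helper: parse one shot-chart <div>'s tip and style attributes.
// The patterns assume the wording seen in the sample HTML, e.g.
// "Oct 24, 2019, GSW vs LAC<br>1st Qtr, 10:02 remaining<br>Missed 3-pointer from 28 ft<br>GSW trails 0-5"
function parseShot(tip, style) {
  const [game = "", clock = "", description = "", score = ""] = tip.split("<br>");

  const clockMatch = clock.match(/^(.+), (\d+:\d+) remaining$/);
  const shotMatch = description.match(/^(Made|Missed) (\d)-pointer from (\d+) ft$/);
  const topMatch = style.match(/top:\s*(\d+)px/);
  const leftMatch = style.match(/left:\s*(\d+)px/);

  return {
    game,
    period: clockMatch ? clockMatch[1] : null,
    timeRemaining: clockMatch ? clockMatch[2] : null,
    made: shotMatch ? shotMatch[1] === "Made" : null,
    shotValue: shotMatch ? Number(shotMatch[2]) : null,
    distanceFt: shotMatch ? Number(shotMatch[3]) : null,
    score,
    // pixel offsets of the marker within the chart image
    top: topMatch ? Number(topMatch[1]) : null,
    left: leftMatch ? Number(leftMatch[1]) : null,
  };
}
```

With Cheerio, something like `$('.shot-area > div').toArray().map(div => parseShot($(div).attr('tip'), $(div).attr('style')))` would produce one record per shot.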

With that selector handy, let's head on over to Node.js land and write a script to get us this data.

Set up Puppeteer

Let's set up a new JavaScript project by running these commands in your terminal:

npm init -y
npm install --save cheerio puppeteer

Now, Puppeteer is quite a bit more complex than request, and I don't claim to be an expert, but I'll share what has worked for me. Puppeteer works by controlling a headless Chrome (Chromium) browser, so in theory it can do everything your browser can. To get started, we need to require puppeteer and launch the browser.

const puppeteer = require('puppeteer');

async function main() {
  console.log('Starting...');
  const browser = await puppeteer.launch();

  // TODO download the HTML after running js on the page

  await browser.close();
  console.log('Done!');
}

main();

Next, we need to create a new "page" to fetch the HTML with. I like to have a helper function that creates the page with all the options I want for every request. There may be other ways to do this, but this has worked for me. In particular, I set the timeout to 20 seconds (perhaps too generous, but Puppeteer can be slow sometimes!), provide a spoofed User Agent string, and set a desktop-sized viewport.

async function newPage(browser) {
  // get a new page (declared with const so it isn't an accidental global)
  const page = await browser.newPage();
  page.setDefaultTimeout(20000); // 20s

  // spoof user agent
  await page.setUserAgent('Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.111 Safari/537.36');

  // pretend to be desktop
  await page.setViewport({
    width: 1980,
    height: 1080,
  });

  return page;
}

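With the page all set up, we need to do the actual fetching and running of the JS. I use another helper function for this. The waitUntil: 'domcontentloaded' option makes page.goto() resolve once the HTML has been parsed and the page's initial (non-async) scripts have run, which was enough for this page's shot chart. If a page fills in its content later (for example, after its own API calls finish), you may need to wait longer, such as with waitUntil: 'networkidle0' or page.waitForSelector().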

async function fetchUrl(browser, url) {
  const page = await newPage(browser);
  await page.goto(url, {
    timeout: 20000,
    waitUntil: 'domcontentloaded'
  });
  const html = await page.content();
  await page.close();
  return html;
}

I've found it most reliable to create a new page for each request; otherwise Puppeteer occasionally seems to hang at page.content().
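If page.content() still hangs now and then, one extra safeguard (my addition, not something the original script does) is to race the call against a timer so a stuck request fails loudly instead of stalling the whole run. Here's a minimal sketch built on Promise.race; withTimeout is a hypothetical helper, not part of Puppeteer.

```javascript
// Sketch: reject if `promise` hasn't settled within `ms` milliseconds.
// withTimeout is a hypothetical helper, not part of Puppeteer.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms}ms`)), ms);
  });
  // whichever settles first wins; always clear the timer so it can't keep Node alive
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

In fetchUrl you might then write `const html = await withTimeout(page.content(), 20000, 'page.content()');`. Note that this only stops waiting: it doesn't cancel the underlying Puppeteer call, so you'd still want to close the page afterwards.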

Download the HTML page

With these two functions, we can set up our code to download the shooting data:

async function downloadShootingData(browser) {
  const url = 'https://www.basketball-reference.com/players/c/curryst01/shooting/2020';
  const htmlFilename = 'shots.html';

  // download the HTML from the web server
  console.log(`Downloading HTML from ${url}...`);
  const html = await fetchUrl(browser, url);

  // save the HTML to disk
  await fs.promises.writeFile(htmlFilename, html);
}

Again, I recommend checking to see if you already have the file before running the fetchUrl function since puppeteer can be pretty slow.

async function downloadShootingData(browser) {
  const url = 'https://www.basketball-reference.com/players/c/curryst01/shooting/2020';
  const htmlFilename = 'shots.html';

  // check if we already have the file
  const fileExists = fs.existsSync(htmlFilename);
  if (fileExists) {
    console.log(`Skipping download for ${url} since ${htmlFilename} already exists.`);
    return;
  }

  // download the HTML from the web server
  console.log(`Downloading HTML from ${url}...`);
  const html = await fetchUrl(browser, url);

  // save the HTML to disk
  await fs.promises.writeFile(htmlFilename, html);
}
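If you end up downloading many pages, the same skip-if-cached check can be pulled out into a reusable helper. This is a sketch under my own naming: downloadOnce is hypothetical, and fetchHtml stands in for whatever async function does the real download (for example, () => fetchUrl(browser, url)). Unlike downloadShootingData, it returns the HTML either way, reading it back from disk on a cache hit.

```javascript
const fs = require('fs');

// Sketch: only call fetchHtml() if `filename` isn't already on disk.
// downloadOnce is hypothetical; fetchHtml is any async function returning a string.
async function downloadOnce(filename, fetchHtml) {
  if (fs.existsSync(filename)) {
    console.log(`Skipping download since ${filename} already exists.`);
    return fs.promises.readFile(filename, 'utf8');
  }
  const html = await fetchHtml();
  await fs.promises.writeFile(filename, html);
  return html;
}
```

Keeping the fetch function as a parameter means the caching logic doesn't care whether the HTML came from Puppeteer or from a plain HTTP request.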

Now we just need to call this function after we've launched the browser:

async function main() {
  console.log('Starting...');

  // download the HTML after javascript has run
  const browser = await puppeteer.launch();
  await downloadShootingData(browser);
  await browser.close();

  console.log('Done!');
}

Then run the program:

node index.js

By george, we've got it! Check shots.html and you'll see all the shooting <div>s are sitting right there waiting to be parsed and saved.
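One gap in main() as written: if downloadShootingData() throws (say, because page.goto() times out), browser.close() never runs, which may leave the headless browser running and the script hanging. Wrapping the work in try/finally is a common fix. Here's a minimal sketch with a hypothetical withBrowser helper; it takes the launch function as a parameter so it can be exercised without Puppeteer installed.

```javascript
// Sketch: guarantee browser.close() runs whether the work succeeds or throws.
// withBrowser is a hypothetical helper; `launch` is assumed to be puppeteer.launch
// (or any async function returning an object with an async close() method).
async function withBrowser(launch, work) {
  const browser = await launch();
  try {
    return await work(browser);
  } finally {
    await browser.close(); // runs on success and on error
  }
}

// Possible usage in the scraper (not executed here):
// const puppeteer = require('puppeteer');
// withBrowser(() => puppeteer.launch(), (browser) => downloadShootingData(browser))
//   .catch((err) => { console.error(err); process.exitCode = 1; });
```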

Parse the HTML with Cheerio

At this point, the process of parsing is the same as we did in case 2. We have an HTML document and we want to extract data using a selector (.shot-area > div) we've already figured out. Looking at the shot <div>s again, what data can we get from them?

<div style="top:331px;left:259px;" tip="Oct 24, 2019, GSW vs LAC<br>1st Qtr, 10:02 remaining<br>Missed 3-pointer from 28 ft<br>GSW trails 0-5" class="tooltip miss">×</div>

How about we grab the x and y positions from the style attribute, the point value of the shot (2-pointer or 3-pointer) from the tip attribute, and whether the shot was made or missed from the class attribute?

Just as before, let's load the HTML file and parse it with cheerio:

async function parseShots() {
  console.log('Parsing shots HTML...');

  // the input filename
  const htmlFilename = 'shots.html';

  // read the HTML from disk
  const html = await fs.promises.readFile(htmlFilename);

  // parse the HTML with Cheerio
  const $ = cheerio.load(html);

  // for each of the shot divs, convert to JSON
  const divs = $('.shot-area > div').toArray();

  // TODO: convert divs to shot JSON objects

  return shots;
}

We've got the <div>s as an array now, so let's map each one to a JSON object, converting each of the attributes mentioned above.

const shots = divs.map(div => {
  const $div = $(div);

  // style="left:50px;top:120px" -> x = 50, y = 120
  // slice(0, -2) drops the trailing "px"; the `+` prefix converts the string to a number
  const x = +$div.css('left').slice(0, -2);
  const y = +$div.css('top').slice(0, -2);

  // class="tooltip make" or "tooltip miss"
  const madeShot = $div.hasClass('make');

  // tip="...Made 3-pointer..."
  const shotPts = $div.attr('tip').includes('3-pointer') ? 3 : 2;

  return { x, y, madeShot, shotPts };
});

This should give us output like the following:

[
  {
    "x": 259,
    "y": 331,
    "madeShot": false,
    "shotPts": 3
  },
  ...
]
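The slice(0, -2) approach in the mapping code assumes each value ends in exactly "px". If you'd rather not depend on that assumption (or on cheerio's .css()), parseFloat() is a more forgiving alternative: it reads the leading number and ignores trailing units. Here's a stdlib-only sketch that works on the raw style string; parseShotPosition is a hypothetical name.

```javascript
// Sketch: read x/y out of a raw style attribute such as "top:331px;left:259px;".
// parseShotPosition is a hypothetical helper, not part of cheerio.
function parseShotPosition(style) {
  const props = {};
  for (const decl of style.split(';')) {
    const colon = decl.indexOf(':');
    if (colon === -1) continue; // skip empty or malformed declarations
    const name = decl.slice(0, colon).trim();
    const value = decl.slice(colon + 1).trim();
    props[name] = value;
  }
  // parseFloat reads the leading number and ignores a trailing unit like "px"
  return { x: parseFloat(props.left), y: parseFloat(props.top) };
}

console.log(parseShotPosition('top:331px;left:259px;')); // { x: 259, y: 331 }
```

Inside the map callback, this would look like `const { x, y } = parseShotPosition($div.attr('style'));`.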

We just need to hook up the parseShots() function to our main() function and then save the results to disk:

async function main() {
  console.log('Starting...');

  // download the HTML after javascript has run
  const browser = await puppeteer.launch();
  await downloadShootingData(browser);
  await browser.close();

  // parse the HTML
  const shots = await parseShots();

  // save the scraped results to disk
  await fs.promises.writeFile('shots.json', JSON.stringify(shots, null, 2));

  console.log('Done!');
}

Check the disk for shots.json and you should see the results. At last, we've done it.
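Before moving on to analysis, it can be worth a quick sanity check that the scraped data looks plausible. The sketch below tallies makes and attempts for 2- and 3-pointers; summarizeShots is a hypothetical helper, and it assumes shots.json holds objects shaped like the output above. It only reads shots.json if that file exists in the current directory.

```javascript
const fs = require('fs');

// Sketch: tally makes and attempts per shot value, e.g. { '3PT': { made, attempts } }.
// summarizeShots is hypothetical; it expects objects shaped like { madeShot, shotPts }.
function summarizeShots(shots) {
  const summary = {};
  for (const { madeShot, shotPts } of shots) {
    const key = `${shotPts}PT`;
    summary[key] = summary[key] || { made: 0, attempts: 0 };
    summary[key].attempts += 1;
    if (madeShot) summary[key].made += 1;
  }
  return summary;
}

if (fs.existsSync('shots.json')) {
  const savedShots = JSON.parse(fs.readFileSync('shots.json', 'utf8'));
  console.log(summarizeShots(savedShots));
}
```

If the attempt total doesn't match the number of symbols on the shot chart, the selector or the download step is worth a second look.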

Here's the full script:

const fs = require('fs');
const cheerio = require('cheerio');
const puppeteer = require('puppeteer');

const TIMEOUT = 20000; // 20s timeout with puppeteer operations
const USER_AGENT =
  'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.111 Safari/537.36';

async function newPage(browser) {
  // get a new page
  const page = await browser.newPage();
  page.setDefaultTimeout(TIMEOUT);

  // spoof user agent
  await page.setUserAgent(USER_AGENT);

  // pretend to be desktop
  await page.setViewport({
    width: 1980,
    height: 1080,
  });

  return page;
}

async function fetchUrl(browser, url) {
  const page = await newPage(browser);
  await page.goto(url, { timeout: TIMEOUT, waitUntil: 'domcontentloaded' });
  const html = await page.content(); // sometimes this seems to hang, so now we create a new page each time
  await page.close();
  return html;
}

async function downloadShootingData(browser) {
  const url =
    'https://www.basketball-reference.com/players/c/curryst01/shooting/2020';
  const htmlFilename = 'shots.html';

  // check if we already have the file
  const fileExists = fs.existsSync(htmlFilename);
  if (fileExists) {
    console.log(
      `Skipping download for ${url} since ${htmlFilename} already exists.`
    );
    return;
  }

  // download the HTML from the web server
  console.log(`Downloading HTML from ${url}...`);
  const html = await fetchUrl(browser, url);

  // save the HTML to disk
  await fs.promises.writeFile(htmlFilename, html);
}

async function parseShots() {
  console.log('Parsing shots HTML...');
  // the input filename
  const htmlFilename = 'shots.html';
  // read the HTML from disk
  const html = await fs.promises.readFile(htmlFilename);
  // parse the HTML with Cheerio
  const $ = cheerio.load(html);
  // for each of the shot divs, convert to JSON
  const divs = $('.shot-area > div').toArray();
  const shots = divs.map(div => {
    const $div = $(div);
    // style="left:50px;top:120px" -> x = 50, y = 120
    const x = +$div.css('left').slice(0, -2);
    const y = +$div.css('top').slice(0, -2);
    // class="tooltip make" or "tooltip miss"
    const madeShot = $div.hasClass('make');
    // tip="...Made 3-pointer..."
    const shotPts = $div.attr('tip').includes('3-pointer') ? 3 : 2;
    return {
      x,
      y,
      madeShot,
      shotPts,
    };
  });

  return shots;
}

async function main() {
  console.log('Starting...');
  // download the HTML after javascript has run
  const browser = await puppeteer.launch();
  await downloadShootingData(browser);
  await browser.close();

  // parse the HTML
  const shots = await parseShots();

  // save the scraped results to disk
  await fs.promises.writeFile('shots.json', JSON.stringify(shots, null, 2));
  console.log('Done!');
}

main();

And we're all done with case 3! We've successfully downloaded a page after running JavaScript on it, parsed the HTML in it and extracted the data into a JSON file for future analysis.

That's a wrap

Thanks for following along! Hopefully you've now got a better understanding of how to sniff out the APIs you want to scrape and how to write Node.js scripts to do the scraping. If you've got any suggestions for improvement, comments on the article, or requests for other posts, you can find me on Twitter @pbesh.